
SupremeRAID™ BeeGFS™ Performance with GIGABYTE Servers

This white paper explores how SupremeRAID™, paired with the GIGABYTE S183-SH0 server, forms an extremely dense and efficient parallel filesystem solution that enhances the performance of BeeGFS, making it well suited to High-Performance Computing (HPC) and Artificial Intelligence (AI) applications.

Executive Summary

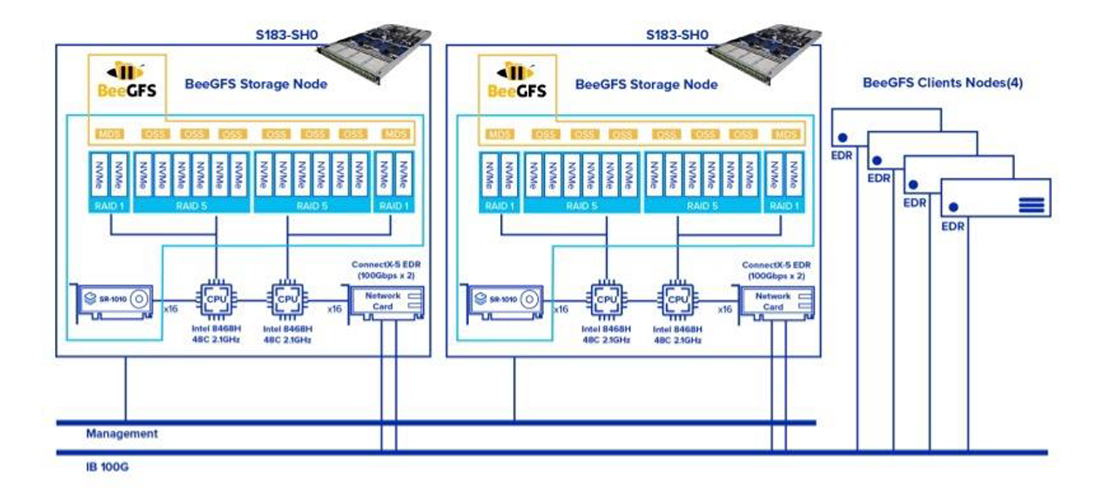

1. Two sets of twelve 7 GB/s SSDs, configured as four RAID 5 groups.
2. Four 100 Gb/s Ethernet links, for a total of 400 Gb/s.
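The configuration notes above imply simple theoretical ceilings that are useful context when reading the results. The Python sketch below computes them under assumptions that are not stated in the white paper: every SSD sustains its rated 7 GB/s, the 24 drives are split evenly across the four RAID 5 groups, 1 GB is 10^9 bytes, and no network, protocol, or parity overhead applies. All constant and function names are illustrative only.

```python
# Idealized bandwidth ceilings for the configuration listed above.
# Assumptions (not from the white paper): drives are split evenly across the
# four RAID 5 groups, 1 GB = 10**9 bytes, and there is no protocol or parity overhead.

SSD_COUNT = 2 * 12      # two sets of twelve SSDs
SSD_GBPS = 7.0          # rated per-drive bandwidth, GB/s
RAID_GROUPS = 4         # four RAID 5 groups
LINKS = 4               # four Ethernet links
LINK_GBITPS = 100       # per-link line rate, Gbit/s


def raw_ssd_bandwidth() -> float:
    """Aggregate drive bandwidth in GB/s if every SSD ran at its rated speed."""
    return SSD_COUNT * SSD_GBPS


def network_bandwidth() -> float:
    """Aggregate Ethernet line rate, converted from Gbit/s to GB/s."""
    return LINKS * LINK_GBITPS / 8


def drives_per_group() -> int:
    """Drives per RAID 5 group, assuming an even split (hypothetical)."""
    return SSD_COUNT // RAID_GROUPS


if __name__ == "__main__":
    print(f"Raw SSD bandwidth: {raw_ssd_bandwidth():.0f} GB/s")
    print(f"Network line rate: {network_bandwidth():.0f} GB/s")
    print(f"Drives per RAID 5 group (assumed even split): {drives_per_group()}")
```

Under these idealized assumptions, remote clients would reach the roughly 50 GB/s network line rate well before the 168 GB/s of raw drive bandwidth; the measured StorageBench and IOzone figures in the white paper remain the numbers to rely on.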

Testing Background

Cluster Architecture

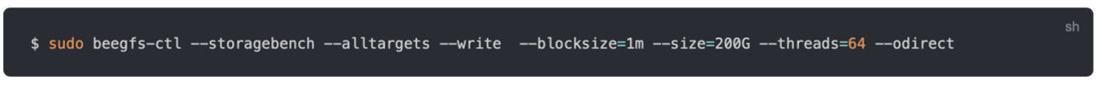

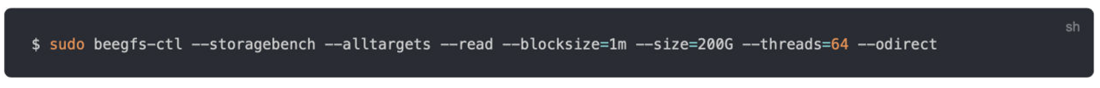

Testing Profiles

Testing Results

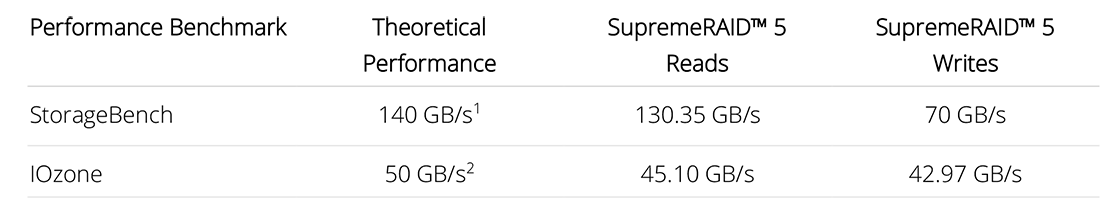

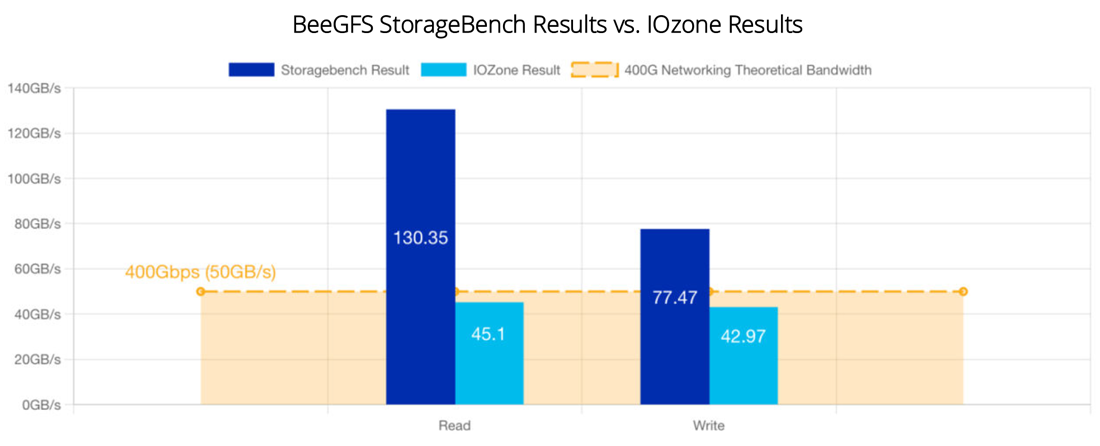

BeeGFS StorageBench Results vs. IOzone Results

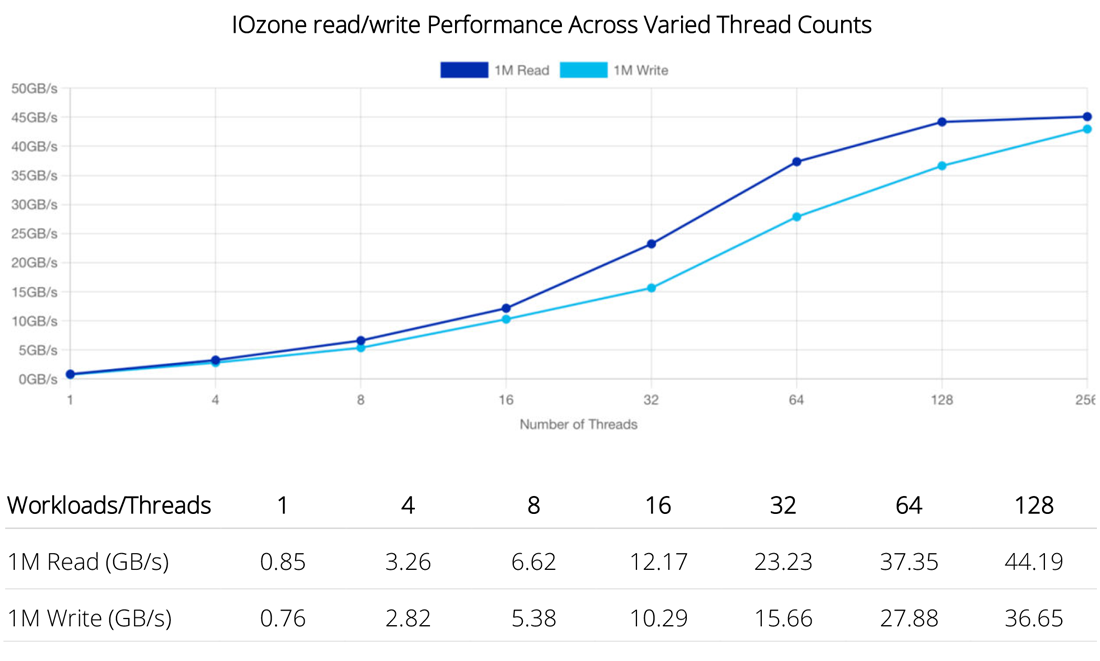

IOzone Read/Write Performance Across Varied Thread Counts

Summary

Conclusion

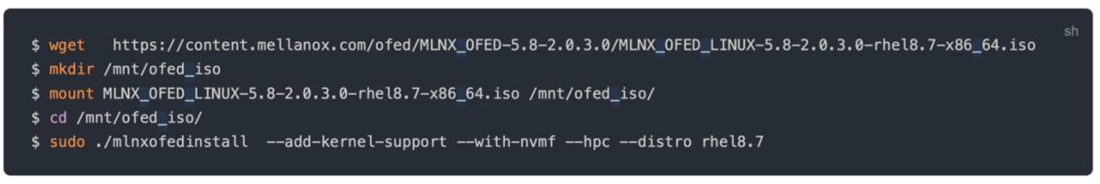

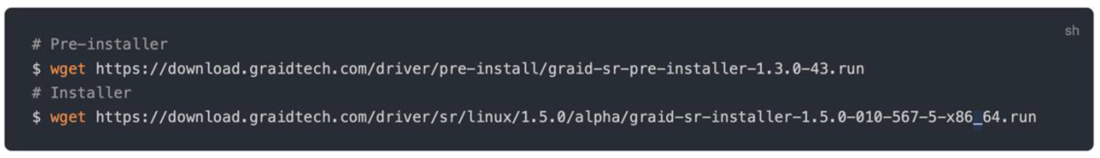

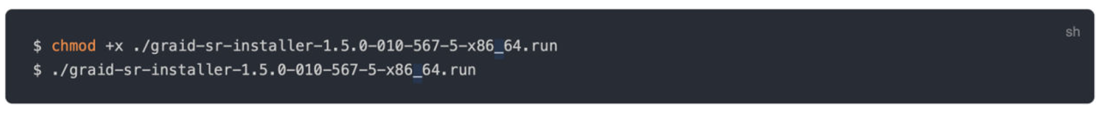

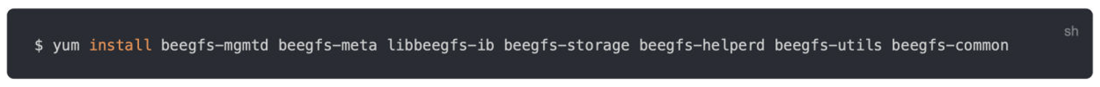

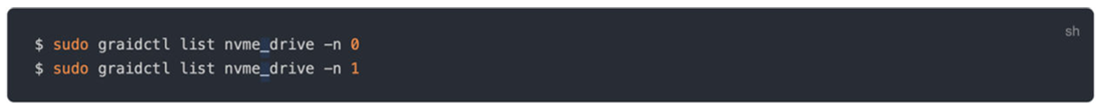

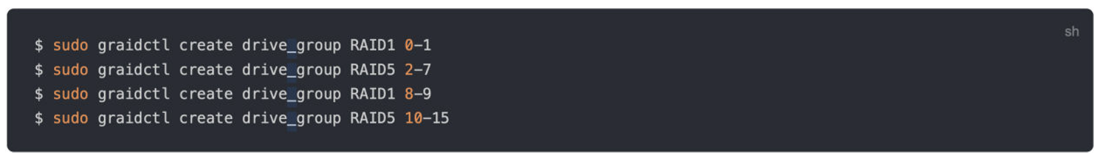

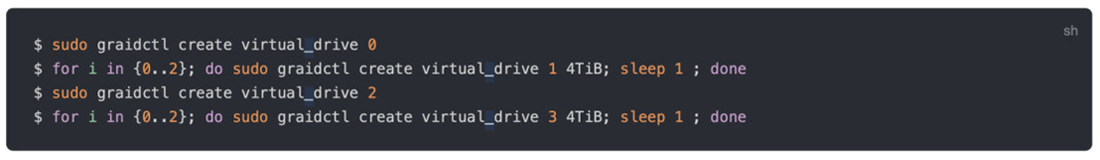

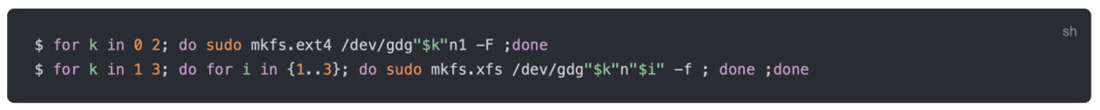

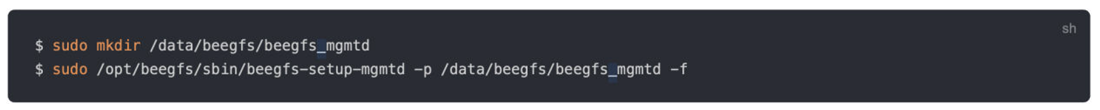

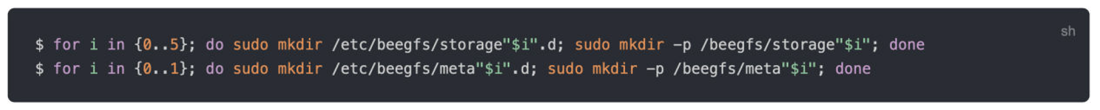

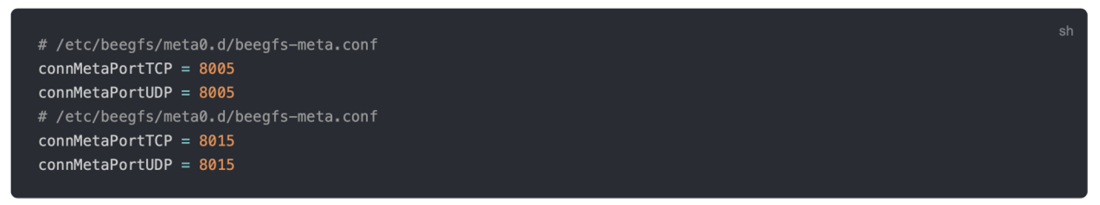

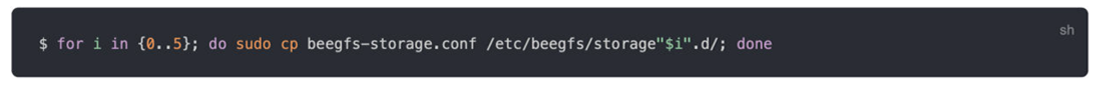

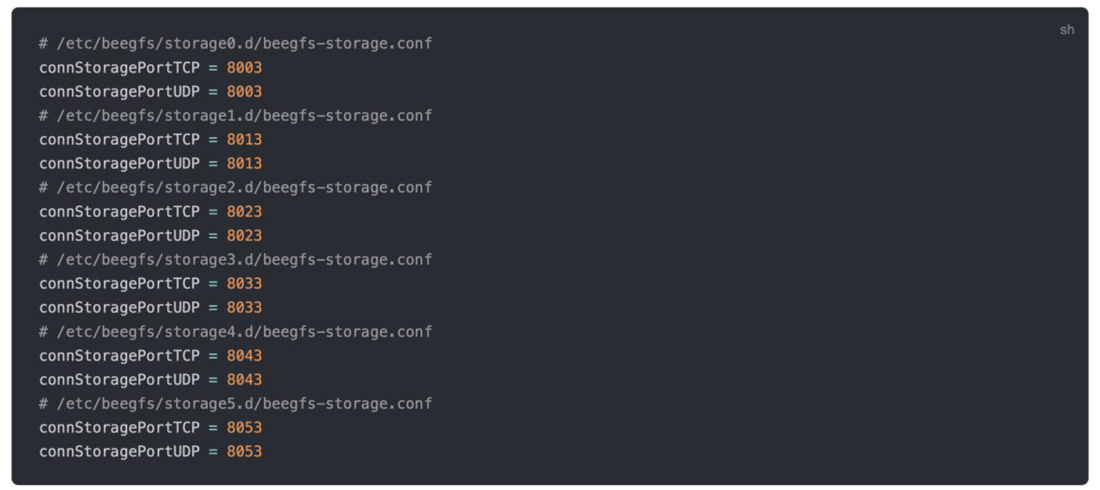

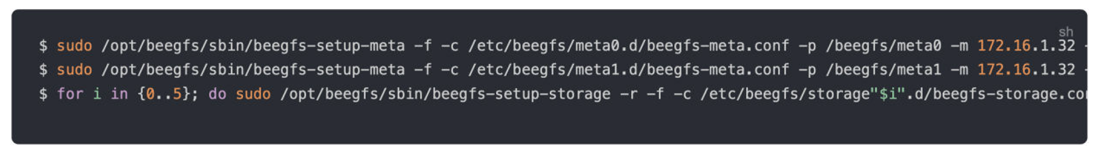

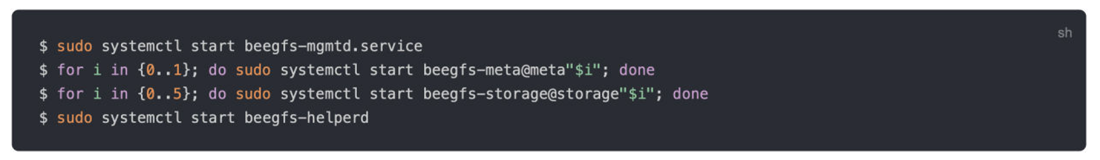

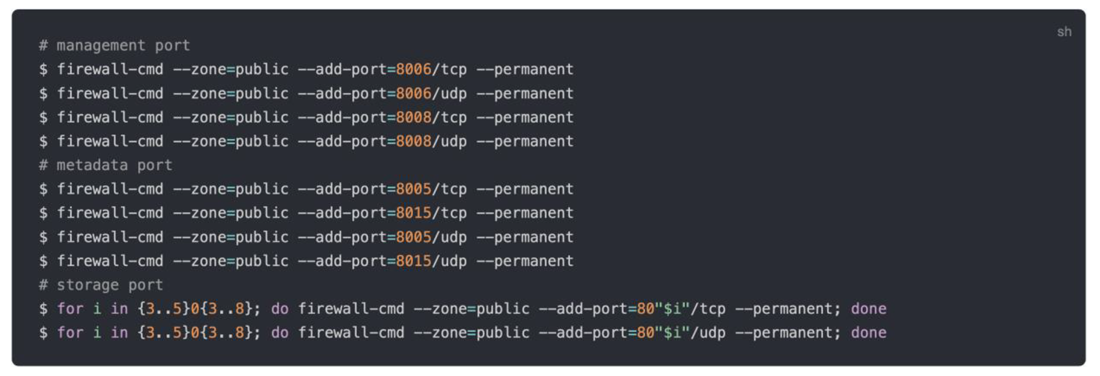

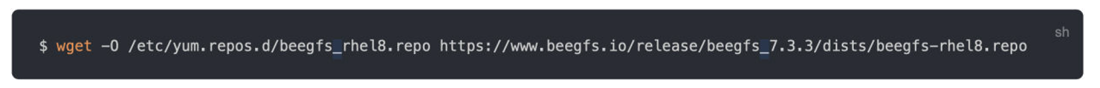

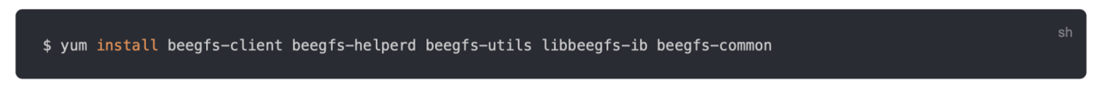

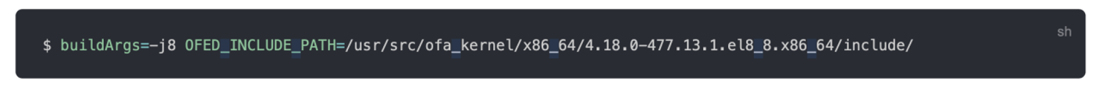

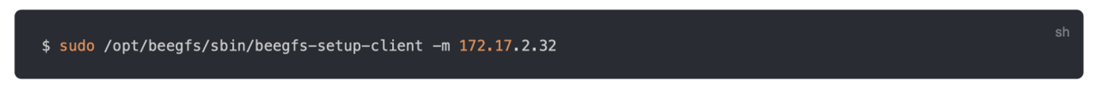

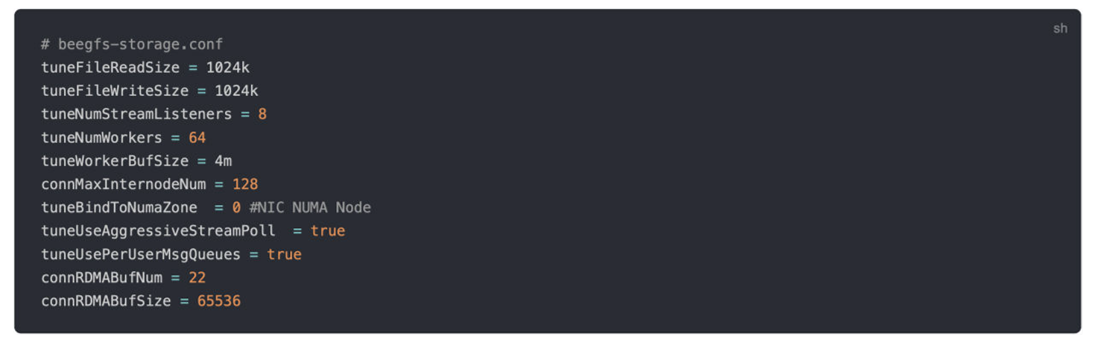

Deployment Details

Introduction

SupremeRAID™

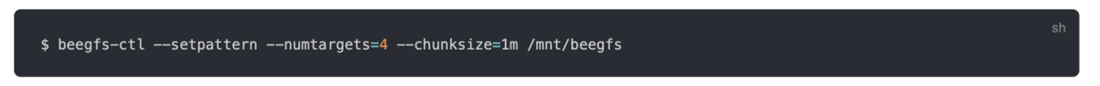

BeeGFS and StorageBench

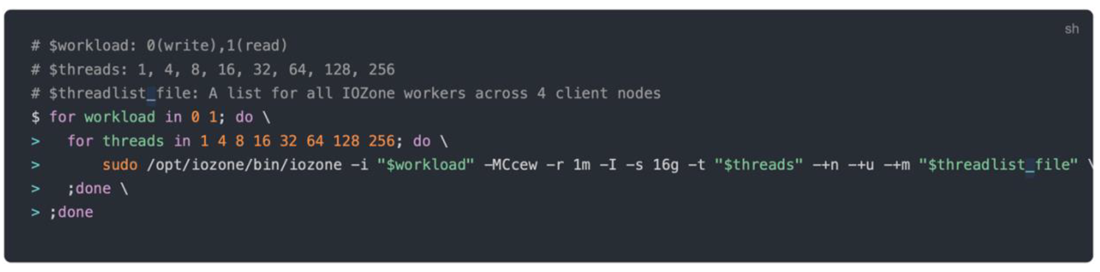

IOzone
