How to Pick the Right Server for AI? Part One: CPU & GPU

With the advent of generative AI and other practical applications of artificial intelligence, the procurement of “AI servers” has become a priority for industries ranging from automotive to healthcare, and for academic and public institutions alike. In GIGABYTE Technology’s latest Tech Guide, we take you step by step through the eight key components of an AI server, starting with the two most important building blocks: CPU and GPU. Picking the right processors will jumpstart your supercomputing platform and expedite your AI-related computing workloads.

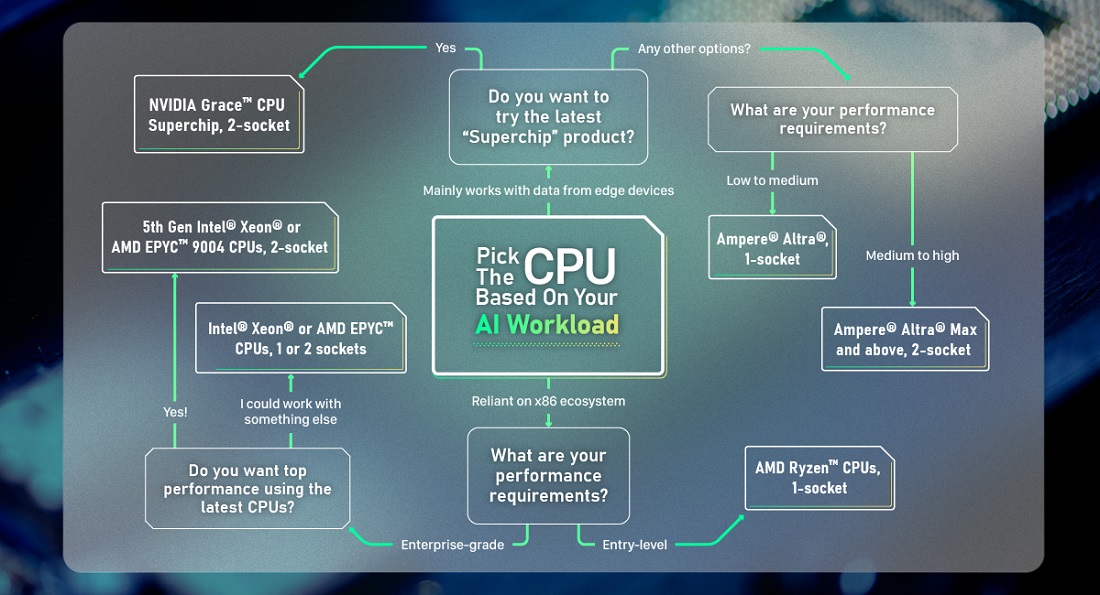

How to Pick the Right CPU for Your AI Server?

While not exhaustive, the flowchart below should give you a good idea of which CPU setup is best for your AI workload.

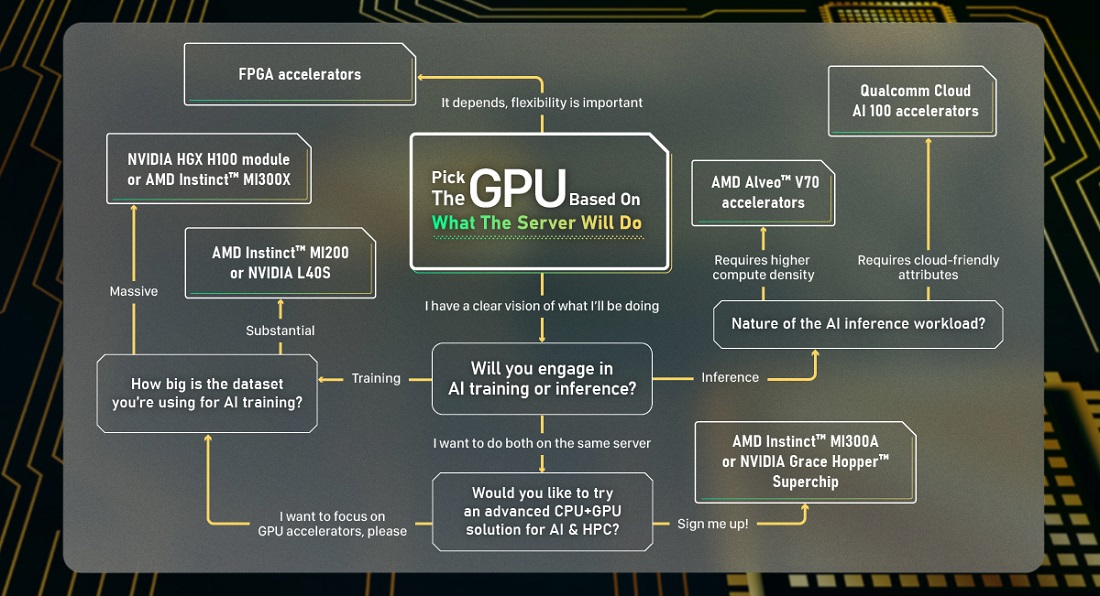

How to Pick the Right GPU for Your AI Server?

As GPUs are key to handling AI workloads, it is important to pick the right options based on your actual requirements.
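A practical first step in matching GPUs to "your actual requirements" is estimating how much GPU memory your model needs. The sketch below is a rough back-of-the-envelope calculation, not a sizing tool from this guide. It assumes that model weights dominate memory use during inference, and it applies a hypothetical `overhead` factor (1.2 by default, an illustrative assumption) to cover activations, KV cache, and framework buffers. The function names `estimate_inference_memory_gb` and `gpus_needed` are hypothetical helpers written for illustration.

```python
import math

# Bytes needed to store one model parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def estimate_inference_memory_gb(num_params: float, precision: str = "fp16",
                                 overhead: float = 1.2) -> float:
    """Rough GPU memory (in GB) to serve a model for inference.

    Weight storage is num_params * bytes-per-parameter; the result is then
    scaled by an assumed overhead factor (hypothetical default of 1.2) for
    activations, KV cache, and framework buffers.
    """
    weight_bytes = num_params * BYTES_PER_PARAM[precision]
    return weight_bytes * overhead / 1e9


def gpus_needed(required_gb: float, gpu_memory_gb: float) -> int:
    """Minimum number of identical GPUs whose combined memory covers the need.

    Assumes the workload can be split evenly across GPUs (e.g. via model
    parallelism), which real deployments only approximate.
    """
    return math.ceil(required_gb / gpu_memory_gb)


if __name__ == "__main__":
    # A 7-billion-parameter model in fp16 on GPUs with 24 GB of memory.
    small = estimate_inference_memory_gb(7e9, "fp16")
    print(f"7B model: ~{small:.1f} GB, GPUs needed: {gpus_needed(small, 24)}")

    # A 70-billion-parameter model in fp16 on GPUs with 80 GB of memory.
    large = estimate_inference_memory_gb(70e9, "fp16")
    print(f"70B model: ~{large:.1f} GB, GPUs needed: {gpus_needed(large, 80)}")
```

With these assumptions, a 7-billion-parameter model in fp16 needs roughly 16.8 GB and fits on a single 24 GB GPU, while a 70-billion-parameter model needs roughly 168 GB and therefore at least three 80 GB GPUs. Training typically requires considerably more memory than inference, because gradients and optimizer states must be stored alongside the weights, so treat these figures as a lower bound.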
