Tech-Guide

How GIGAPOD Provides a One-Stop Service, Accelerating a Comprehensive AI Revolution

Introduction

Challenges in Modern Computing Architectures

Optimized Hardware Configuration

Figure 1: GIGABYTE G593 Series Server

Unique Advantages of the GIGABYTE G593 Series:

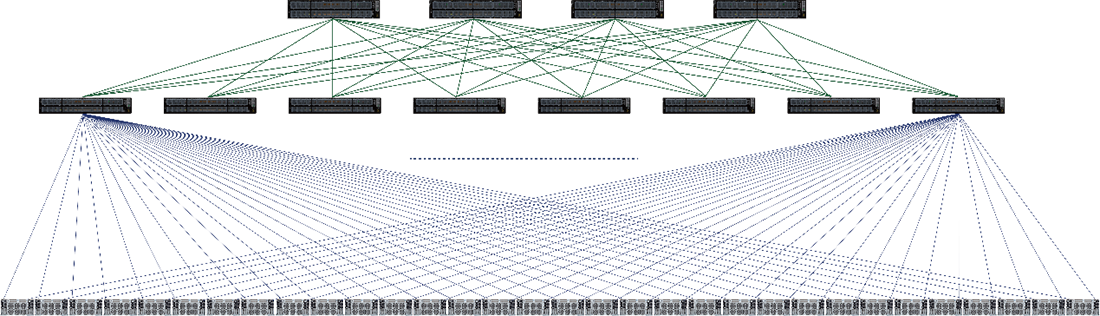

Scalable Network Architecture

GIGAPOD's Network Topology: Non-Blocking Fat-Tree Topology

Figure 2: GIGAPOD’s Cluster Using Fat Tree Topology

Complete Rack-Level AI Solution

Figure 3: GIGAPOD with Liquid Cooling: 4 GPU Compute Racks

Figure 4: GIGAPOD with Air Cooling: 8 GPU Compute Racks

Comprehensive Deployment Process

Figure 4: Deployment Process

AI-Driven Software and Hardware Integration

Conclusion

Get the inside scoop on the latest tech trends, subscribe today!

Get Updates

# Artificial Intelligence (AI)

# AI Training

# Machine Learning Operations (MLOps)

# Supercomputing

# PUE

Get the inside scoop on the latest tech trends, subscribe today!

Get Updates