HPC

High-Performance Computing Cluster

High Performance Computing (HPC)

Symmetric Multi-Processing (SMP)

Massively Parallel Processing (MPP)

Heterogeneous Computing

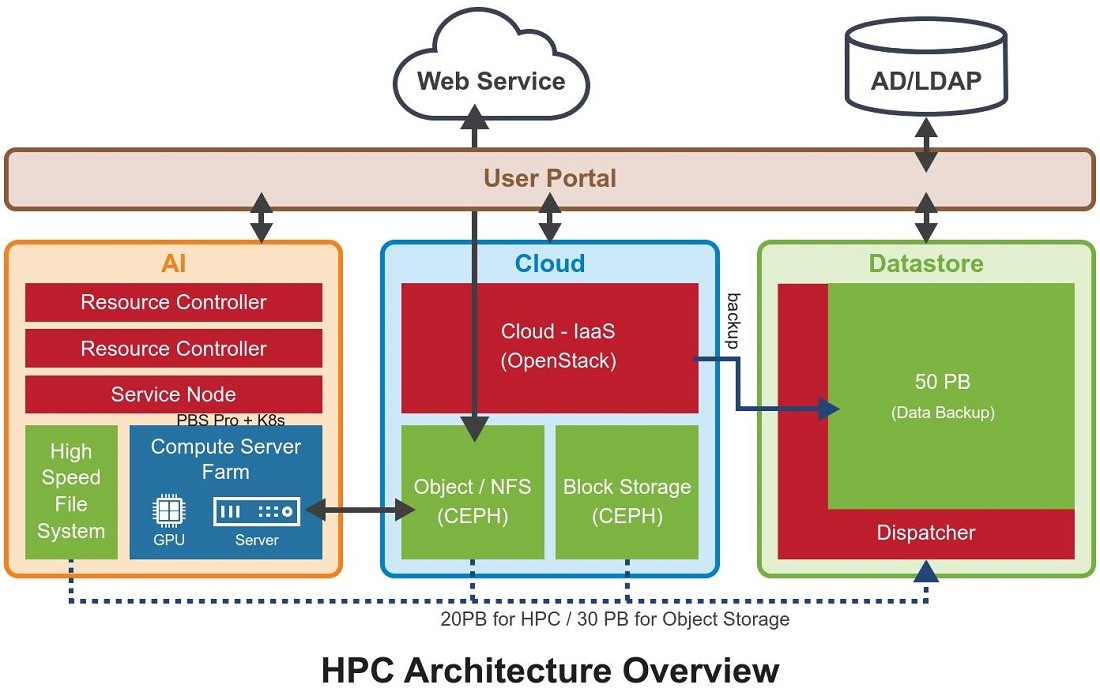

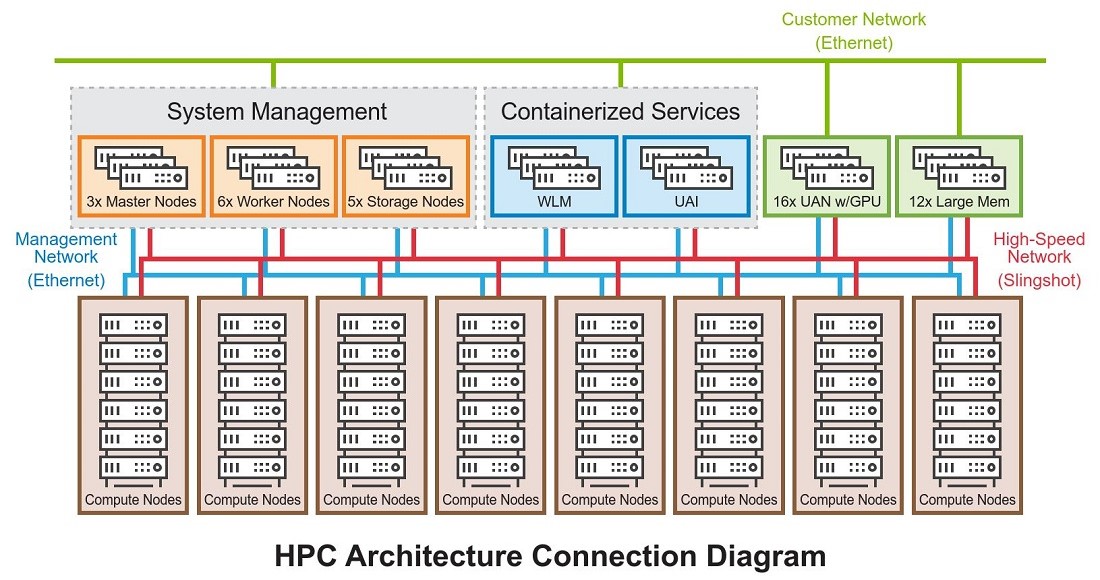

High Performance Computing System

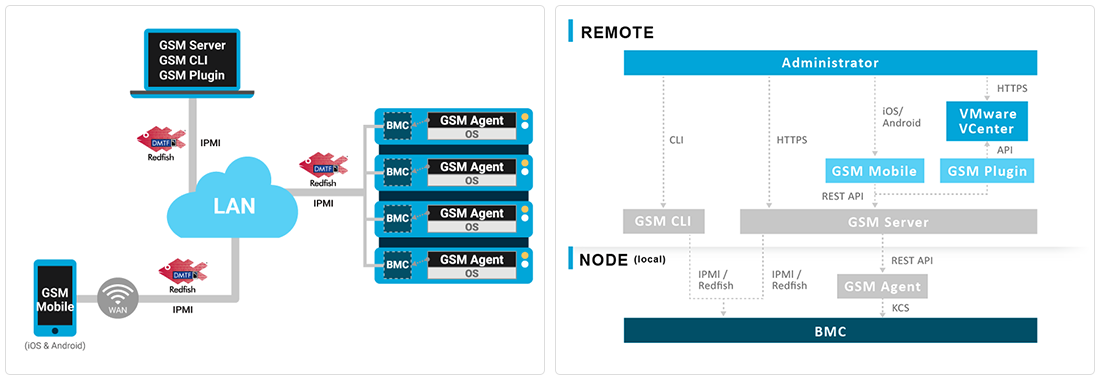

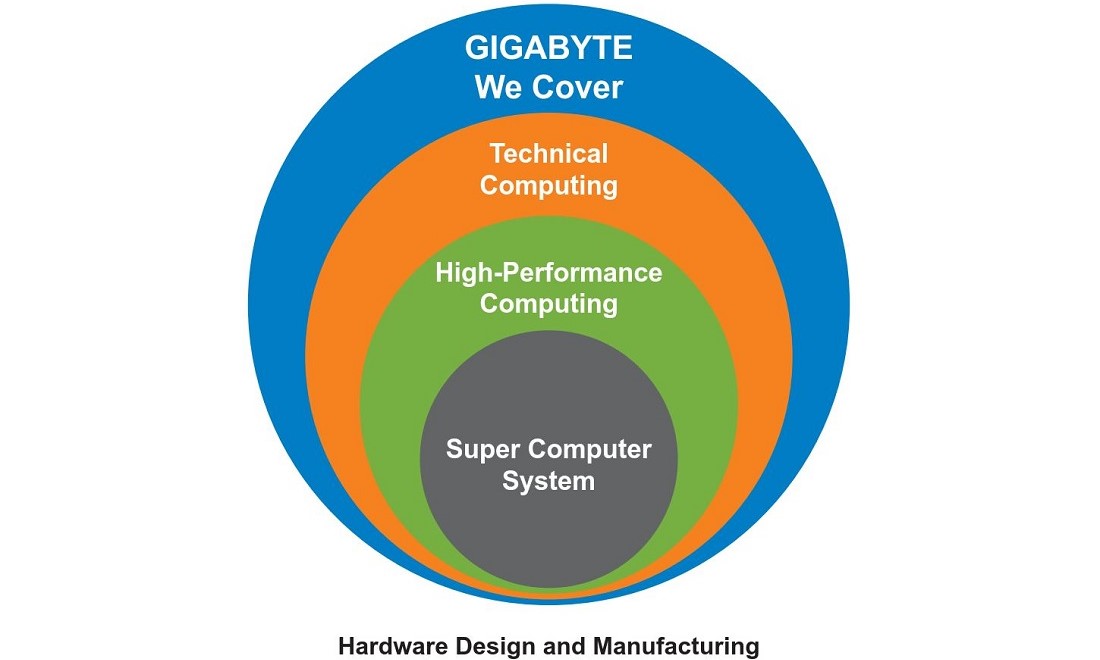

GIGABYTE - Hardware Manager

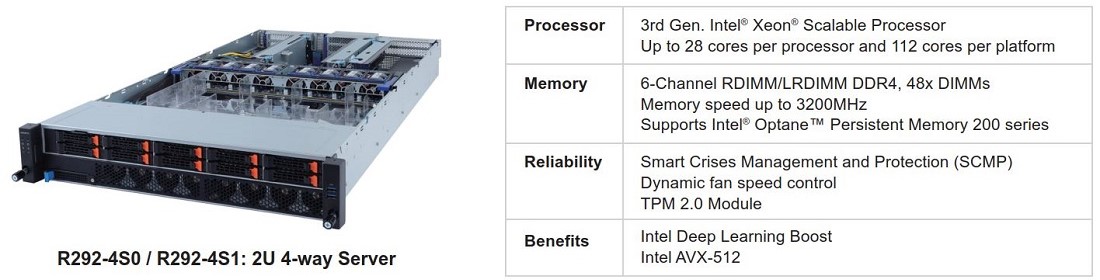

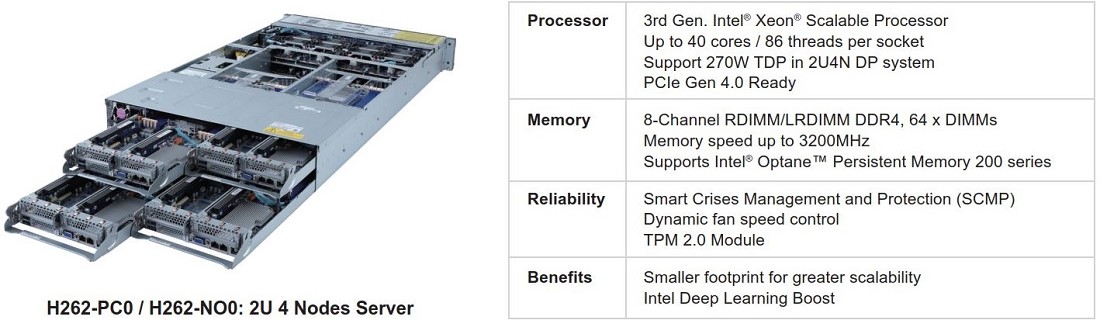

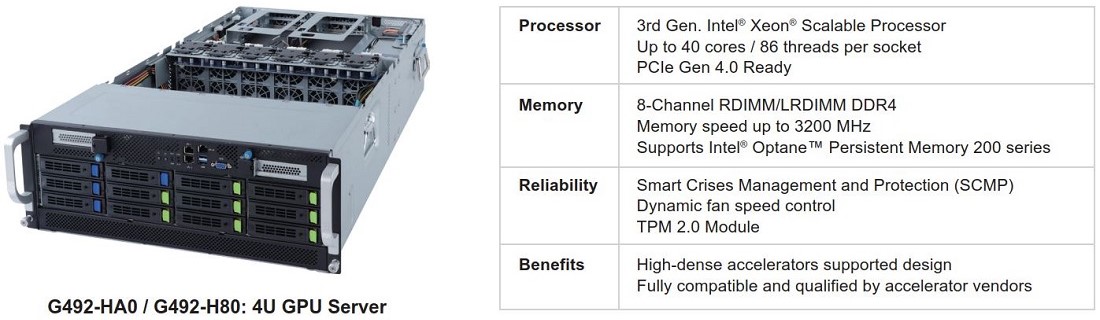

Building a High Performance System with GIGABYTE Servers

