GIGABYTE Powering the Next Generation of HPC Talent at ISC

GIGABYTE's booth at ISC 2019

National Cheng Kung University team working hard

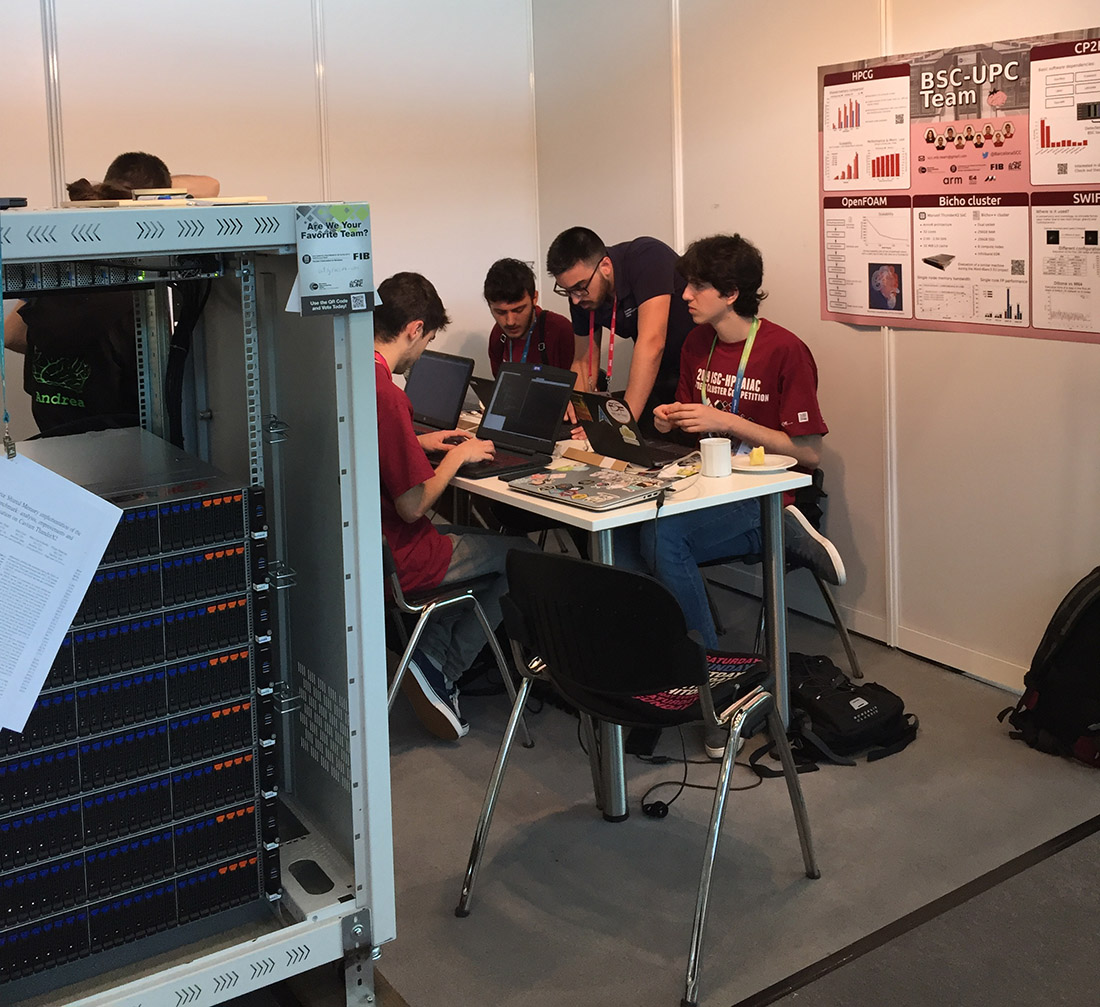

UPC Les Maduixes with their GIGABYTE / Marvell ThunderX2 Arm HPC cluster

Interview with Team RACKlette (ETH Zürich, Switzerland)

ISC19 Meet The Teams interview: ETH

ETH team photo

ETH HPC cluster using 4 x GIGABYTE G291-280

Interview with Team Tartu (University of Tartu, Estonia)

ISC19 Meet The Teams interview: Tartu

University of Tartu team photo

University of Tartu HPC cluster using 4 x GIGABYTE R281-Z94 & 2 x GIGABYTE G291-Z20

Conclusion