New Generation Cloud Storage Architecture

Cloud Storage

Storage Equipment Development History

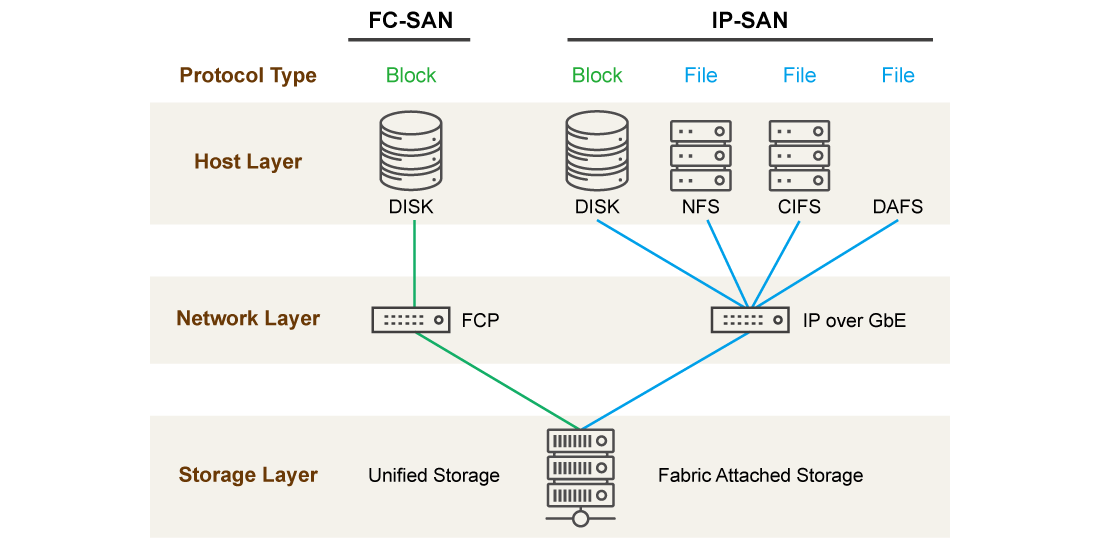

Three Types of Data Storage as a Service (DaaS)

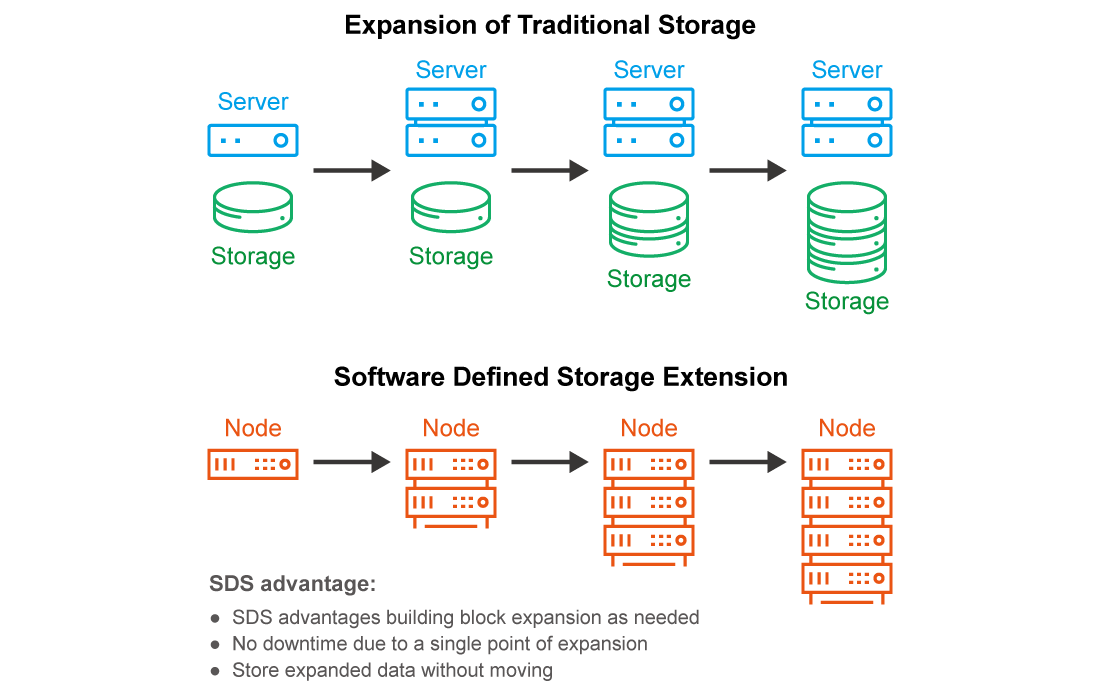

Comparison between Traditional Storage and Software-Defined Storage (SDS) Architectures in Terms of Expansion

Why is SDS better than traditional storage architectures?

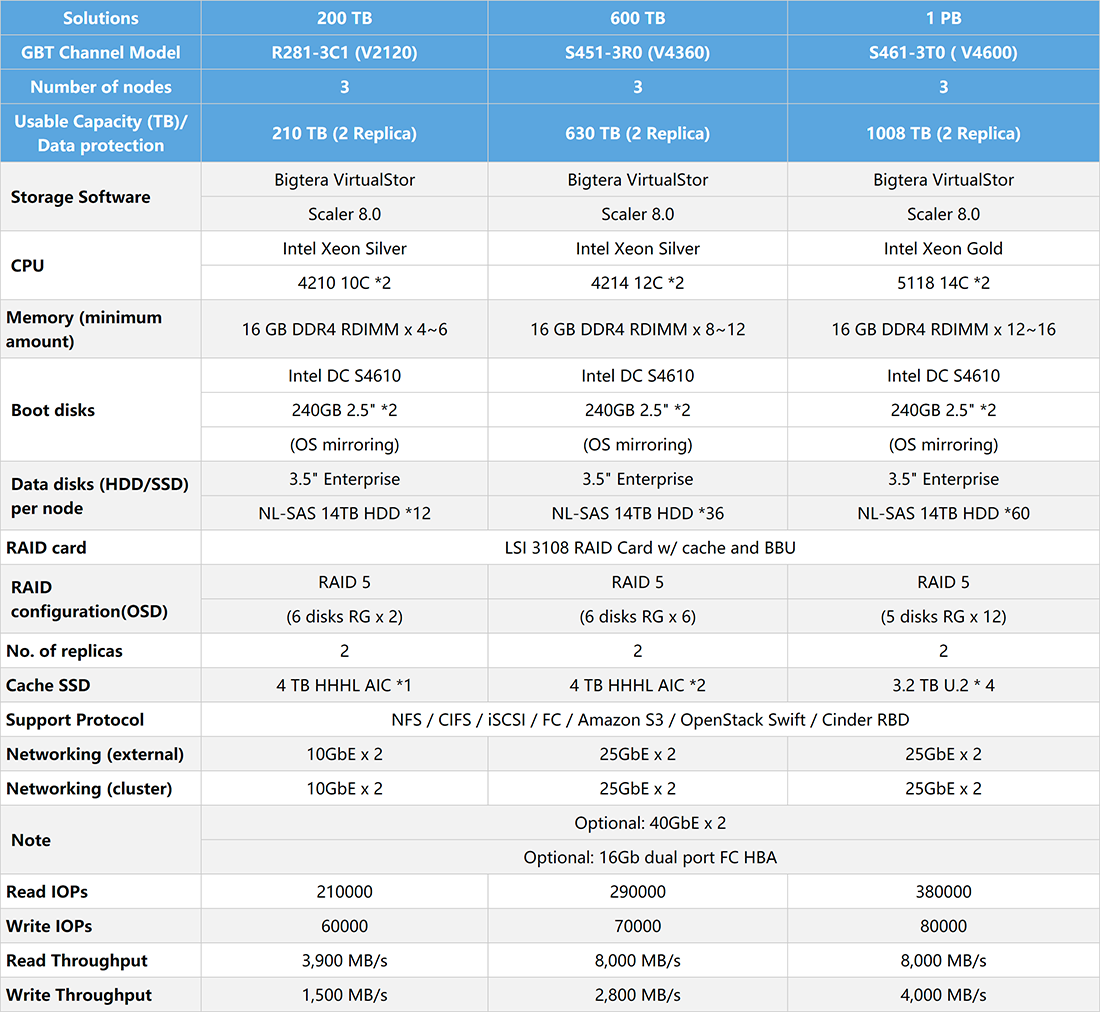

GIGABYTE's Series of Server Storage Solutions

Bigtera VirtualStor Scaler

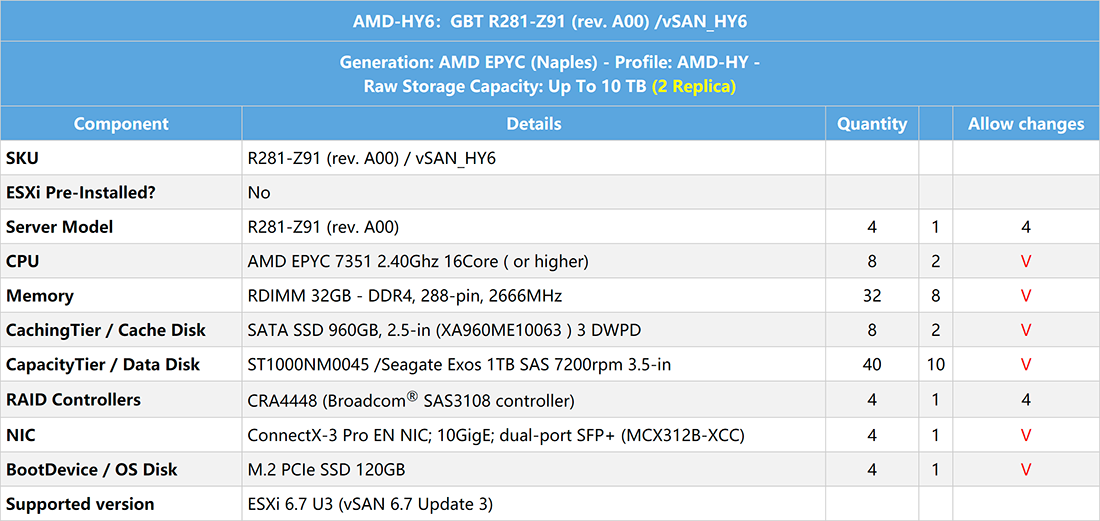

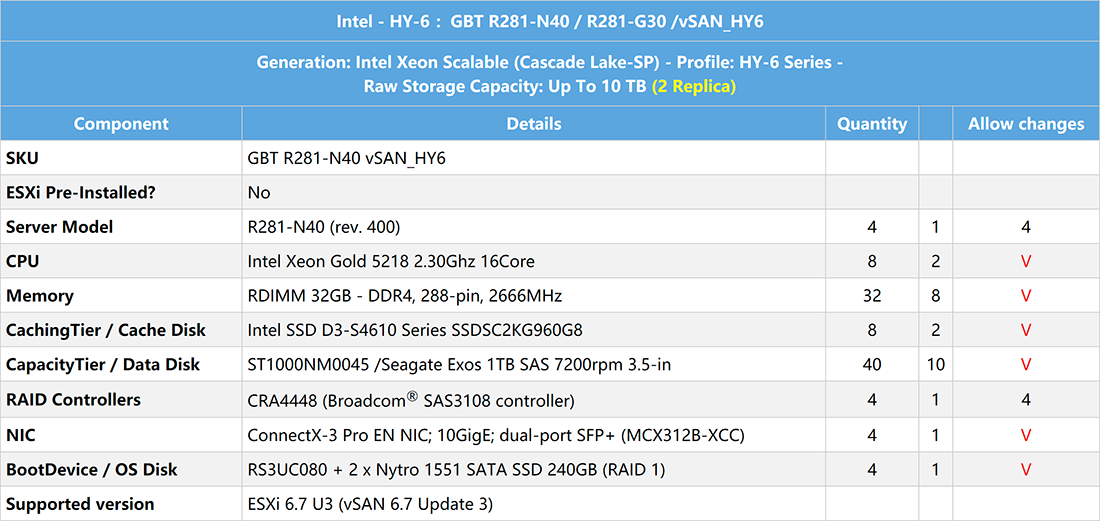

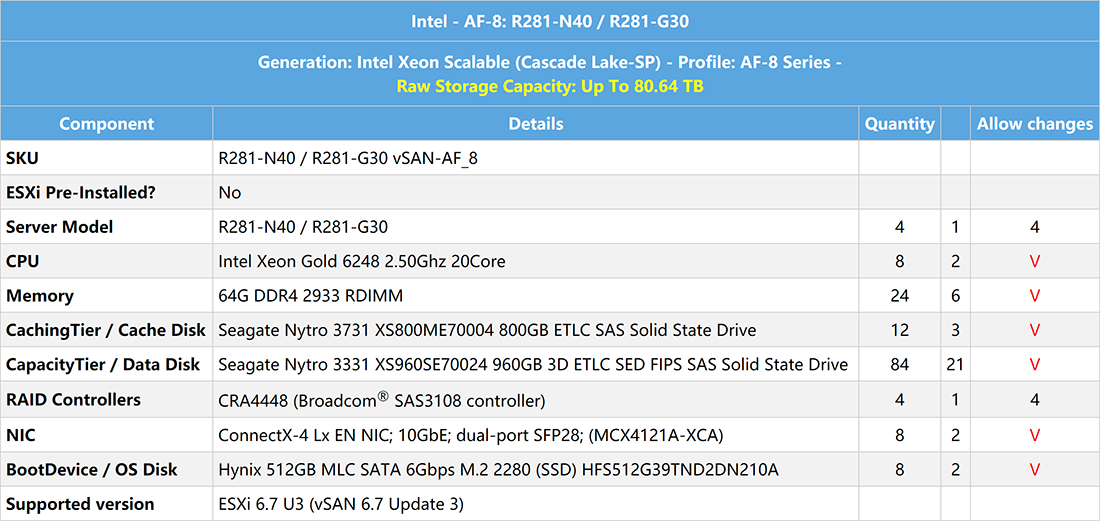

VMware vSAN

Conclusion
